Here’s a dirty little secret: AI engineers are not web developers. That is, the people building AI tools usually come from an AI background, not a web or API background. This split between specialized roles leaves a gaping hole for security vulnerabilities and makes AI systems inherently insecure.

I know, scary right? But that’s the truth.

Bespoke software disciplines combined with security-last principles have real consequences. I know, that’s not your organization, right? You’re “security first,” right? 🤣

Here are some vulnerabilities that make my case for me…

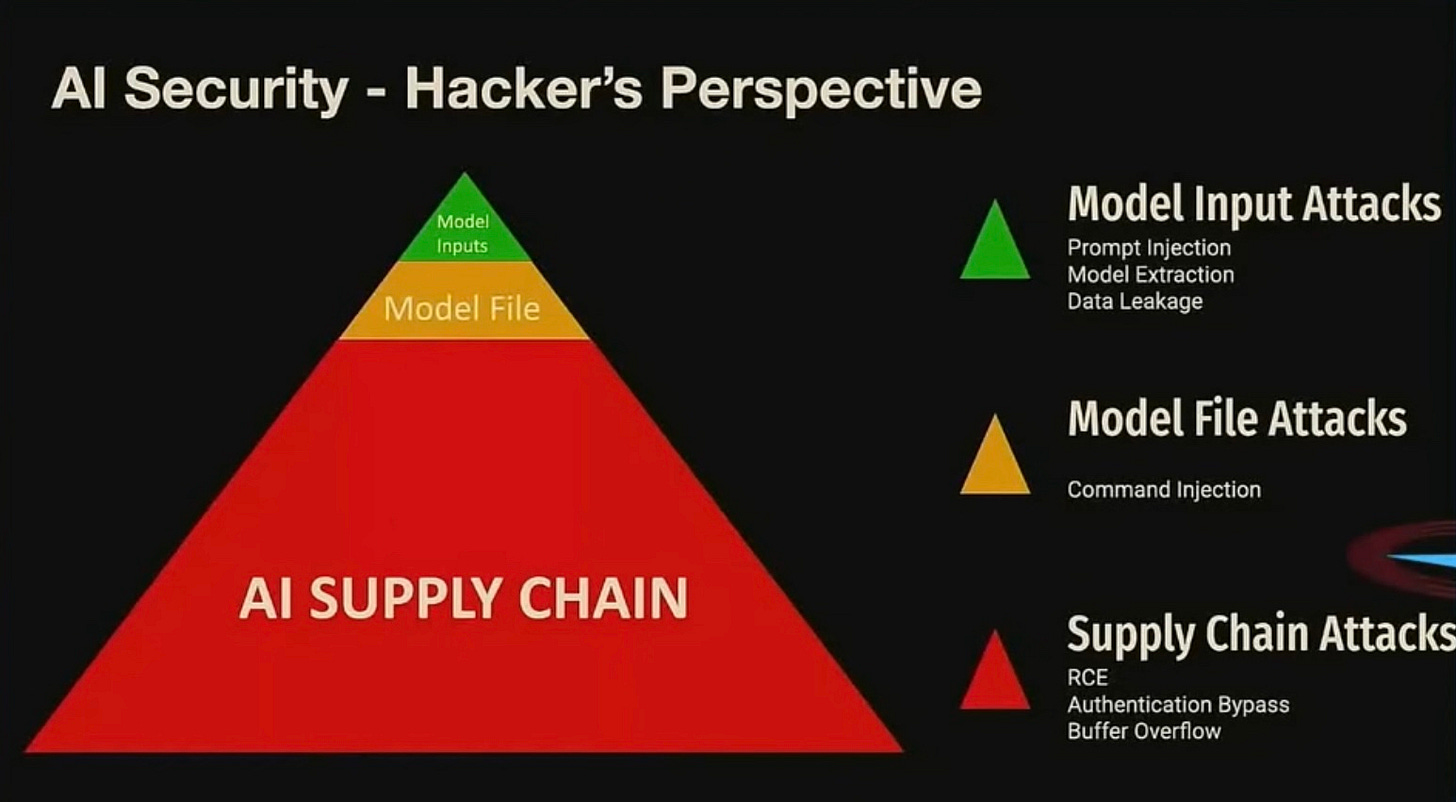

AI Security from a Hacker's Perspective

Zero-day vulnerabilities in AI systems can be categorized based on their location in the AI pipeline.

There are three primary attack vectors:

1. Model Input Attacks: These involve manipulating the data inputs to an AI model to influence its behavior or extract sensitive information. Common methods include prompt injection, model extraction, and data leakage.

2. Model File Attacks: These attacks target the files that store AI models, for example by embedding malicious code in a serialized model file so that it runs when the model is loaded.

3. Supply Chain Attacks: These broader attacks target the tools and infrastructure across the entire AI supply chain and exploit flaws such as remote code execution (RCE), authentication bypass, and buffer overflow vulnerabilities.
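To make the model-file risk concrete, here is a minimal, harmless Python sketch of why loading a pickled "model" can run code. `PoisonedModel`, `payload`, and `EXECUTED` are hypothetical names; a real attacker would swap the harmless `payload` for something like a shell command.

```python
import pickle

# Records what the "malicious" payload did, so the demo stays harmless.
EXECUTED = []


def payload(message: str) -> str:
    """Stand-in for attacker code; here it only appends to a list."""
    EXECUTED.append(message)
    return message


class PoisonedModel:
    """Hypothetical 'model' object whose pickle carries a function call."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair and calls it at load time.
        return (payload, ("code ran during pickle.loads",))


# What an attacker would ship as a model file. Dumping does not call payload().
blob = pickle.dumps(PoisonedModel())

# The victim only "loads a model", yet payload() runs immediately.
restored = pickle.loads(blob)
print(EXECUTED)  # ['code ran during pickle.loads']
```

Nothing in the loading code looks suspicious; the call happens inside `pickle.loads` itself, which is why Python's own documentation warns against unpickling data from untrusted sources.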

Patterns in AI Vulnerabilities

The rush to develop and deploy AI technologies often leads to several recurring vulnerabilities:

Lack of Authentication / Authorization: Many AI tools ship without proper authentication or authorization, so anyone who can reach them over the network can use them.
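A minimal sketch of the missing check, assuming a shared bearer token passed in the `Authorization` header. `is_authorized` is a hypothetical helper, not part of any tool named in this post; in practice you might instead put an authenticating reverse proxy or SSO in front of the service.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_token: str) -> bool:
    """Return True only if the request carries the expected bearer token."""
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # compare_digest is designed to resist timing attacks, so response time
    # does not reveal how much of a guessed token was correct.
    return hmac.compare_digest(token.encode(), expected_token.encode())


print(is_authorized({"Authorization": "Bearer s3cret"}, "s3cret"))  # True
print(is_authorized({"Authorization": "Bearer guess"}, "s3cret"))   # False
print(is_authorized({}, "s3cret"))                                  # False
```

A plain `dict` is case-sensitive; real HTTP header objects usually are not, so the lookup may need adjusting for your framework.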

Excessive File System Access: AI systems frequently run with broad read/write permissions, so a single exploited flaw can read or overwrite far more than the system actually needs.
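One quick audit for overly broad permissions: a sketch that walks a model or data directory and flags anything writable by every user on the machine. It assumes a POSIX system (permission bits mean little on Windows), and `find_world_writable` is a hypothetical helper name.

```python
import os
import stat
from pathlib import Path
from typing import List


def find_world_writable(root: str) -> List[Path]:
    """List files and directories under root that any local user can modify."""
    flagged: List[Path] = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = Path(dirpath) / name
            mode = path.lstat().st_mode
            # On Linux, symlink permission bits are always rwx and unused, so skip them.
            if stat.S_ISLNK(mode):
                continue
            if mode & stat.S_IWOTH:
                flagged.append(path)
    return flagged
```

Anything it returns under a model directory is a file another local process could silently replace.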

Insecure Network Configurations: Poor network settings, such as services listening on all interfaces with nothing in front of them, can expose AI models and tooling to external threats.
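A small sketch of the kind of check a deployment script could run on the host address a service such as an MLflow or Ray server is told to listen on. `is_network_exposed` is hypothetical, it assumes `localhost` maps to a loopback address, and it only accepts literal IPs otherwise.

```python
import ipaddress


def is_network_exposed(bind_host: str) -> bool:
    """Return True if a server listening on bind_host is potentially reachable from other machines."""
    if bind_host == "localhost":  # assumption: localhost resolves to loopback
        return False
    addr = ipaddress.ip_address(bind_host)  # raises ValueError for other hostnames
    # 0.0.0.0 and :: mean "every interface"; any non-loopback address is exposed too.
    return not addr.is_loopback


for host in ("127.0.0.1", "::1", "0.0.0.0", "::", "10.0.0.5"):
    print(host, is_network_exposed(host))
```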

Insecure Model Storage Formats: Some model formats, notably Python pickle, can run arbitrary code when loaded, and model files stored without integrity checks can be modified without anyone noticing.
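Where you cannot avoid pickle, Python's documentation describes restricting which globals an unpickler may load by overriding `Unpickler.find_class`. The sketch below follows that pattern; the contents of `ALLOWED_GLOBALS` are an assumption you would tailor to your own model classes. Tensor-only formats such as safetensors sidestep the problem entirely.

```python
import io
import pickle
from collections import OrderedDict

# Hypothetical allowlist: only these (module, name) globals may be loaded.
ALLOWED_GLOBALS = {
    ("collections", "OrderedDict"),
}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every global the pickle stream references is looked up through here.
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """pickle.loads replacement that refuses anything outside ALLOWED_GLOBALS."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


state = OrderedDict(layer1=[0.1, 0.2], layer2=[0.3])
print(restricted_loads(pickle.dumps(state)) == state)  # True
```

Plain dicts, lists, strings, and numbers are encoded with dedicated pickle opcodes and never hit `find_class`, so they load normally; a payload that references `print`, `os.system`, or any other unlisted callable is rejected before it can run.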

Unsanitized User Inputs: Failure to properly sanitize inputs can lead to various injection attacks.
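For structured inputs like IDs and names, the most robust form of sanitization is an allowlist: accept only what matches an expected pattern. A minimal sketch; the `RUN_ID_PATTERN` format is a hypothetical example, not any tool's real ID format.

```python
import re

# Hypothetical format for an ID a user may supply (e.g. an experiment or run ID).
RUN_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")


def validate_run_id(value: str) -> str:
    """Return value unchanged if it matches the allowlist, else raise ValueError."""
    # fullmatch requires the entire string to match, so "abc/../x" or "abc\n" fail.
    if not RUN_ID_PATTERN.fullmatch(value):
        raise ValueError(f"invalid run id: {value!r}")
    return value


for candidate in ("run_42", "../../etc/passwd", "x; rm -rf /"):
    try:
        print("accepted", validate_run_id(candidate))
    except ValueError as err:
        print("rejected", err)
```

Allowlisting like this helps against path and command injection; it does not stop prompt injection, where the malicious input is ordinary natural language.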

Your Favorite Tools and their Vulnerabilities

Several popular AI tools have specific vulnerabilities. Say it ain’t so?!

MLflow: An open-source platform for managing the end-to-end machine learning lifecycle. It has vulnerabilities such as Local File Inclusion (LFI) and Remote Code Execution (RCE) via the `get-artifact` API call. LFI lets attackers read files from the server's local file system by manipulating a file path in a request, and RCE lets attackers execute arbitrary code on a remote machine.
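This class of LFI bug typically comes from joining a client-supplied path onto a storage directory without checking where the result ends up. A minimal Python sketch of that check, assuming artifacts live under a single local root directory; `resolve_artifact` is a hypothetical helper, not MLflow's code or its actual fix.

```python
from pathlib import Path


def resolve_artifact(base_dir: str, requested: str) -> Path:
    """Map a client-supplied artifact path to a location inside base_dir, or refuse."""
    base = Path(base_dir).resolve()
    # resolve() makes the path absolute, collapses "..", and follows symlinks,
    # so the containment check below sees the real destination.
    # An absolute `requested` replaces base entirely, which the check also catches.
    target = (base / requested).resolve()
    if not target.is_relative_to(base):  # Path.is_relative_to needs Python 3.9+
        raise PermissionError(f"path escapes artifact root: {requested!r}")
    return target


for req in ("run1/model.pkl", "../../etc/passwd", "/etc/passwd"):
    try:
        print("ok", resolve_artifact("/tmp/artifacts", req))
    except PermissionError as err:
        print("blocked", err)
```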

Kubeflow: An open-source toolkit for running Machine Learning (ML) workloads on Kubernetes. It has vulnerabilities like Cross-Site Scripting (XSS) and Server-Side Request Forgery (SSRF) via the `artifacts/get` API call. XSS allows attackers to inject malicious scripts into web pages viewed by others, and SSRF tricks the server into making requests to unintended locations, potentially leaking information.
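SSRF defenses usually come down to checking where a server-side fetch will actually go before making it. A minimal sketch, assuming the service only ever needs to fetch public http(s) URLs; `is_safe_fetch_url` is hypothetical and not Kubeflow code.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def is_safe_fetch_url(url: str) -> bool:
    """Allow only http(s) URLs whose host resolves exclusively to public addresses."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return False
    try:
        infos = socket.getaddrinfo(parsed.hostname, None)
    except socket.gaierror:
        return False
    for _family, _type, _proto, _canon, sockaddr in infos:
        # Drop any IPv6 zone suffix such as "%eth0" before parsing.
        addr = ipaddress.ip_address(sockaddr[0].split("%")[0])
        # Rejects loopback, private ranges, and link-local (e.g. 169.254.169.254 metadata).
        if not addr.is_global or addr.is_multicast:
            return False
    return True


for url in ("http://169.254.169.254/latest/meta-data/", "http://127.0.0.1:8080/", "https://8.8.8.8/"):
    print(url, is_safe_fetch_url(url))
```

Resolving and then fetching in two separate steps leaves a DNS-rebinding gap, so a hardened version would connect to the exact address it validated. For the XSS half, escaping untrusted values before rendering them (for example with Python's `html.escape`) is the standard first line of defense.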

GitHub: The platform used for version control and collaboration. It has vulnerabilities related to Remote Code Execution (RCE) via the `GetArtifact` API call, where attackers can execute arbitrary code on the server.

Realistic Attack Scenarios

Attack scenarios demonstrate how external and internal attackers can exploit AI vulnerabilities:

External Attacker: Uses LinkedIn and tools like theHarvester.py to gather information about the target organization, then launches a JavaScript drive-by attack to get past the firewall and gain network access.

Internal Pentester: Uses vulnerability scanners and tools like Nmap to find and exploit weaknesses in AI libraries such as MLflow, H2O, and Ray.

Done. It’s that easy.
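Finding these services is often as simple as checking their default ports. A minimal Python sketch of that check, for auditing hosts you own or are authorized to test. The port numbers are commonly documented defaults (MLflow's tracking server on 5000, the Ray dashboard on 8265, H2O on 54321) and should be verified against the versions you run; `open_ports` is a hypothetical helper, and Nmap does this far more thoroughly.

```python
import socket
from typing import Dict, List

# Commonly documented default ports; verify them against the versions you run.
DEFAULT_PORTS: Dict[str, int] = {
    "MLflow tracking server": 5000,
    "Ray dashboard": 8265,
    "H2O": 54321,
}


def open_ports(host: str, ports: Dict[str, int], timeout: float = 0.5) -> List[str]:
    """Return the names of services whose port accepts a TCP connection on host."""
    found: List[str] = []
    for name, port in ports.items():
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising.
                if sock.connect_ex((host, port)) == 0:
                    found.append(name)
            except OSError:
                pass  # e.g. unresolvable host; treat as not open
    return found


print(open_ports("127.0.0.1", DEFAULT_PORTS))
```

An open port is only the starting point: the next question is whether the service behind it asks for credentials at all.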

Strategies for Hunting Zero Days

Proactive Security Measures: Adopting a multi-layered security approach, including intrusion detection systems (IDS), regular security audits, and stringent access controls, can significantly reduce the risk of zero-day exploits. Ensuring that all AI models and infrastructure components are regularly updated and patched is essential.

Bug Bounty Programs: Structured programs such as Trend Micro’s Zero Day Initiative give independent researchers incentives to discover and responsibly report vulnerabilities, fostering collaboration between researchers and organizations.

Collaborative Defense: The broader cybersecurity community, including vendors, researchers, and regulatory bodies, must work together to identify and mitigate zero-day vulnerabilities. Sharing threat intelligence and collaborating on best practices can enhance the overall security posture of AI systems.

Securing the AI Supply Chain: Every link in the AI supply chain, from development environments to deployment infrastructures, must be secured. Implementing robust authentication, encrypting sensitive data, and securing communication channels are critical steps to safeguard against supply chain attacks.
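A concrete first step is refusing to load any model artifact whose SHA-256 digest doesn't match a value pinned from a trusted source. The sketch assumes the expected digest reaches you through a separate, trusted channel (a signed manifest or lockfile, say); `verify_artifact` is a hypothetical helper.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: str, expected_sha256: str) -> None:
    """Raise ValueError unless the file's SHA-256 matches the pinned digest."""
    actual = sha256_of(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(f"checksum mismatch for {path}: got {actual}")
```

Call `verify_artifact` immediately before deserializing, so nothing reads a tampered file. A hash only proves the file matches what was pinned; signing the manifest is what ties it to a trusted publisher.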

AI-Powered Detection: Ironically enough, AI can help detect anomalies and potential vulnerabilities in real time by analyzing large datasets and identifying patterns indicative of attacks. AI-driven threat detection systems are well suited to processing streaming data, flagging anomalies, and surfacing likely weaknesses before they are exploited.
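Under the hood, anomaly detection starts from a baseline of normal behavior and flags departures from it. The sketch below is deliberately not machine learning: it is a plain z-score check, standing in for the idea that ML-based detectors generalize. The request-rate numbers are made up for illustration, and the threshold of 3 standard deviations is a conventional but arbitrary choice.

```python
import statistics
from typing import List, Sequence


def flag_anomalies(baseline: Sequence[float], observed: Sequence[float],
                   threshold: float = 3.0) -> List[int]:
    """Return indices in observed whose z-score against baseline exceeds threshold.

    baseline needs at least two values so a standard deviation exists.
    """
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any deviation at all is anomalous.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / stdev > threshold]


baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # hypothetical requests/minute
print(flag_anomalies(baseline, [101, 99, 450]))  # [2]
```

Here the baseline mean is 100 with a standard deviation of 2, so only the burst of 450 requests stands out.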

Is It Hopeless?

Zero-day vulnerabilities in AI systems represent a significant challenge that necessitates innovative and proactive security measures.

Break down those bespoke walls between software disciplines and rethink your AI SDLC.

Leveraging AI for detection, fostering a collaborative defense environment, and implementing comprehensive security practices are crucial for protecting AI assets from unknown threats. As AI continues to evolve, so too must our approaches to securing these critical systems.

By understanding and addressing these vulnerabilities, organizations can better protect their AI systems and ensure a more secure and reliable technological future. In the dynamic world of AI, the best defense is a well-prepared offense. Stay ahead of threats by continuously innovating and prioritizing security at every stage of development. The future of AI is a battleground—best make it a fortress. Good luck. 🍀

#AISecurity #ZeroDay #CyberSecurity #AIThreats #TechInnovation #DataProtection #Infosec #AIDevelopment #CyberDefense #TechSecurity #MLSecurity #AIResearch #SecureAI #FutureOfAI